If Google can’t see your pages, your products can’t sell.

One overlooked line in your robots.txt file can quietly block Googlebot, trigger disapprovals in Google Merchant Center, and wipe your product visibility across Google Shopping. It’s a silent killer — and it hits performance hard.

Let’s fix it — fast.

At FeedSpark, we’ve been managing product feeds and resolving Merchant Center issues for nearly as long as Google Shopping has existed. We’ve seen this crawl error time and time again, and we know exactly how to fix it. That’s why we’re sharing our knowledge through our blogs.

What This Error Means (And Why It Hurts)

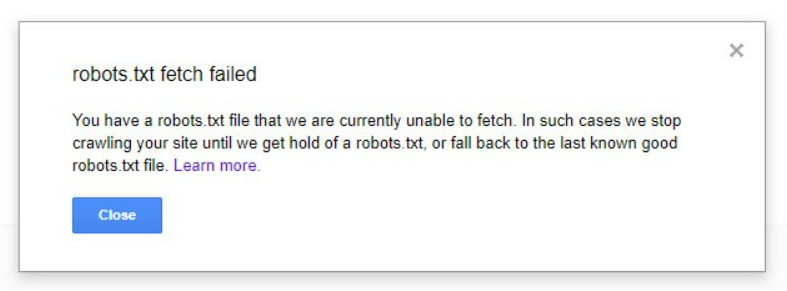

You’ll usually spot it flagged in Google Merchant Center like this:

This warning means Google can’t crawl your site properly. As a result, it can’t check your product content or landing pages. The outcome? Products may be disapproved, disqualified from Shopping ads, or stop appearing in search altogether.

In other words, there’s a visibility gap between your product feed and what Google can crawl — and that’s a direct threat to your performance.

This can also lead to related issues like Mismatched Product Availability, where Google can’t verify stock because robots.txt rules stop it from crawling the page.

What’s Blocking Google?

Here are the common culprits behind crawl issues:

- Overly broad robots.txt rules that block essential resources like images or scripts

- Blocked parameters that stop product URLs with query strings (e.g. ?sku=) from being crawled

- Geo or IP-based restrictions: if Googlebot is location-blocked, you’ll have issues

- CAPTCHAs or firewalls that prevent bots from accessing content

- Site migrations or CMS errors where the robots.txt file wasn’t updated properly

Any of these issues can cause disapprovals or stop new products from launching, which is especially risky in peak periods like Black Friday or Christmas.

How to Fix the robots.txt Issue

1. Use the Right robots.txt Structure

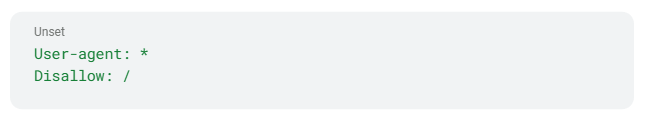

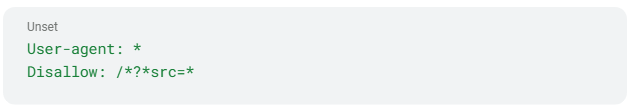

Don’t block Googlebot by accident. A bad config looks like this:

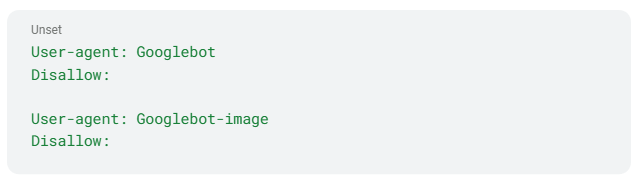

That tells all crawlers to stay away. Instead, be specific:

✅ This gives Google full access to your product pages and images — both critical for Google Merchant Center checks.
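You can check the difference between a blanket block and a specific config with Python's standard-library `urllib.robotparser`. The sketch below uses two illustrative configs. The "bad" one is the blanket `Disallow: /`. The "specific" one gives `Googlebot` and `Googlebot-Image` their own groups with an empty `Disallow:` (which allows everything), and keeps other crawlers out of a hypothetical `/checkout/` path. The `example.com` URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Illustrative configs (assumptions, not taken from a live site)
BAD_ROBOTS = """\
User-agent: *
Disallow: /
"""

SPECIFIC_ROBOTS = """\
User-agent: Googlebot
Disallow:

User-agent: Googlebot-Image
Disallow:

User-agent: *
Disallow: /checkout/
"""

# Placeholder URLs for a product page and its image
PRODUCT_URL = "https://www.example.com/products/blue-widget"
IMAGE_URL = "https://www.example.com/images/blue-widget.jpg"


def can_fetch(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Parse robots.txt text and report whether `agent` may crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for name, robots in (("bad", BAD_ROBOTS), ("specific", SPECIFIC_ROBOTS)):
        print(name,
              "page:", can_fetch(robots, PRODUCT_URL),
              "image:", can_fetch(robots, IMAGE_URL, "Googlebot-Image"))
```

Under the blanket rule, both the page and the image come back `False`. Under the specific config, both come back `True`. `urllib.robotparser` applies the first matching rule and ignores Google's `*` and `$` wildcards, so treat it as a quick sanity check rather than an exact copy of Googlebot's behaviour.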

Blocked images can trigger Invalid Image warnings in Merchant Center. Read our latest blog on how to avoid this issue.

2. Review Disallowed Parameters

Sometimes, a wildcard like this sneaks in:

That will block any URL with a query string, which includes your product URLs if they use ?sku= or similar.

✅ Remove this line, or update it with care. Blocking parameters is often unnecessary for eCommerce.
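Python's built-in `urllib.robotparser` only does prefix matching, so it can't show what a wildcard rule does. The sketch below hand-rolls the matching rules Google documents for robots.txt:

- `*` matches any run of characters.
- A trailing `$` anchors the end of the URL.
- The longest matching rule wins.
- `Allow` wins a tie.

It assumes the stray line is `Disallow: /*?`. The `Allow: /*?sku=` exception and the URLs are hypothetical, and percent-encoding edge cases are ignored.

```python
import re
from urllib.parse import urlsplit


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Turn a robots.txt path pattern into a regex using Google's * and $ rules."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal characters, then let each '*' match any sequence.
    body = re.escape(pattern).replace(r"\*", ".*")
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], url: str) -> bool:
    """Apply (directive, pattern) rules from one user-agent group to a URL.

    The longest matching pattern wins; Allow beats Disallow on a tie.
    If no rule matches, the URL may be crawled.
    """
    parts = urlsplit(url)
    target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    best_len, allowed = -1, True
    for directive, pattern in rules:
        if not pattern:  # empty values are ignored (empty Disallow blocks nothing)
            continue
        if pattern_to_regex(pattern).match(target):
            is_allow = directive.lower() == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


# Hypothetical rule sets: the stray wildcard alone, and with a careful exception
WILDCARD_ONLY = [("Disallow", "/*?")]
WITH_EXCEPTION = [("Disallow", "/*?"), ("Allow", "/*?sku=")]

product = "https://www.example.com/products/blue-widget?sku=123"
search = "https://www.example.com/search?q=widgets"

print(is_allowed(WILDCARD_ONLY, product))   # False: blocked by /*?
print(is_allowed(WITH_EXCEPTION, product))  # True: the longer Allow rule wins
print(is_allowed(WITH_EXCEPTION, search))   # False: only /*? matches
```

The longer `Allow` pattern beats the shorter `Disallow`. That lets you keep internal search URLs out of the crawl without hiding `?sku=` product pages.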

3. Use Tools to Spot the Block

Validate crawl access using:

- Google Search Console’s URL Inspection Tool

- Search Console’s robots.txt report (the successor to the retired robots.txt Tester)

- External tools like Robots.txt Checker

✅ Run a test on a product URL and you’ll instantly see what’s being blocked. Run these checks before every big feed update or site change; they’ll save you hours of diagnostics later.
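To make that pre-update check repeatable, a short script can run every landing page and image URL from a feed export through your robots.txt before you push changes. This is a sketch that rests on three assumptions:

- The feed is a CSV whose headers are Merchant Center's `id`, `link` and `image_link` attributes.
- Every URL sits on the same host as the robots.txt being tested. Images served from a CDN are governed by that host's own robots.txt.
- `urllib.robotparser`'s prefix matching is close enough for your rules, since it ignores `*` and `$` wildcards.

The sample robots.txt and feed rows are hypothetical.

```python
import csv
import io
from urllib.robotparser import RobotFileParser

# Which crawler to test each feed attribute as (assumed mapping)
CHECKS = (("link", "Googlebot"), ("image_link", "Googlebot-Image"))

# Hypothetical robots.txt and feed export used for illustration
SAMPLE_ROBOTS = "User-agent: *\nDisallow: /images/\n"
SAMPLE_FEED = (
    "id,link,image_link\n"
    "SKU-1,https://www.example.com/products/blue-widget,"
    "https://www.example.com/images/blue-widget.jpg\n"
    "SKU-2,https://www.example.com/products/red-widget,"
    "https://www.example.com/media/red-widget.jpg\n"
)


def audit_feed(robots_txt: str, feed_csv: str) -> list[tuple[str, str, str]]:
    """Return (id, attribute, url) for each feed URL the robots.txt blocks."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = []
    for row in csv.DictReader(io.StringIO(feed_csv)):
        for attribute, agent in CHECKS:
            url = row.get(attribute)
            if url and not parser.can_fetch(agent, url):
                blocked.append((row["id"], attribute, url))
    return blocked


if __name__ == "__main__":
    for product_id, attribute, url in audit_feed(SAMPLE_ROBOTS, SAMPLE_FEED):
        print(f"BLOCKED {product_id} {attribute}: {url}")
```

With the sample data, only SKU-1's image is flagged, because it lives under the disallowed `/images/` path. To test the live file instead of a pasted copy, `RobotFileParser` also provides `set_url()` and `read()` to fetch robots.txt over HTTP.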

Why It Matters (and Escalates Fast)

Here’s how fast this can snowball:

- Products get disapproved within hours

- Google Shopping ads stop serving

- Sales drop while you wait 24–48 hours for Google’s next crawl

- You lose impression share to better-structured competitors

Ultimately, it’s a preventable performance bottleneck — if you catch it early.

How FeedSpark Helps

For our fully managed agency brands, we don’t manage the robots.txt file directly, but we do spot issues before they hit performance. We:

- Run daily audits and flag crawl blocks

- Uncover why products aren’t surfacing, and where disapprovals are coming from

- Give tech teams the exact fixes for the robots.txt file

- Keep Shopping performance watertight with proactive monitoring

Final Thoughts: Don’t Let Robots.txt Tank Your ROAS

Check Google’s full guidance on robots.txt issues to make sure your listings meet every spec.

Your robots.txt file might seem small, but if it blocks Googlebot, it blocks revenue. Make it part of your pre-launch QA and ongoing feed management strategy.

Need help with your feed management strategy? Contact FeedSpark and we’ll help you stay Google-compliant, visible, and fully optimised.

About The Author: Zoe Bates
